The data center is getting a makeover. The nondescript industrial buildings once hummed away largely behind the scenes, powering the various facets of our online lives.

With AI, that’s all changing. Companies like Meta, Microsoft, Google, Amazon, and OpenAI are spending billions (with plans for trillions) to build a new breed of data center designed for the AI age: supersized behemoths with vast appetites for energy and water, and a far bigger impact on the communities where they are located.

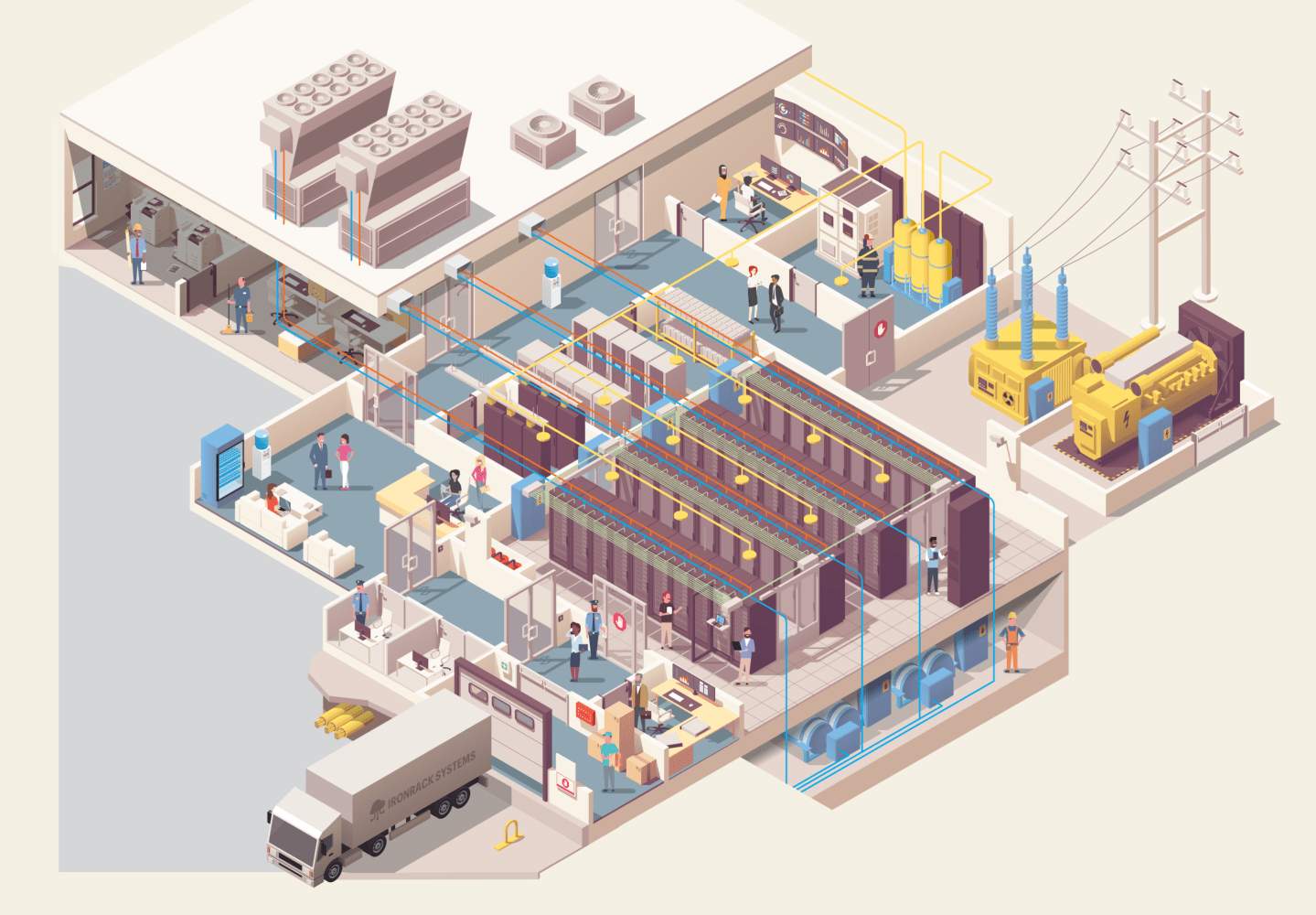

That’s because modern AI runs on vast clusters of tens of thousands of heavy, power-hungry graphics processing units (GPUs) that perform continuous calculations to train or run AI models, rather than traditional consumer or enterprise applications. Those clusters generate intense heat that demands industrial-scale cooling and direct access to large amounts of power, land, and water.

While a pre-AI-era data center might have spanned 100,000 to 300,000 square feet in a single building located in a city, today’s mega AI data centers are breaking ground in Texas Hill Country, the Arizona desert, or the wilds of Wyoming. They can span millions of square feet across hundreds or even thousands of acres (think dozens of football fields or city blocks), and need hundreds of megawatts, or even a gigawatt, of power.

The buildings are packed with head-spinning amounts of material (OpenAI says the fiber-optic cable in its Stargate data center in Abilene, Texas, could circle the Earth 16 times) and designed from the ground up for AI. The racks of tightly packed GPUs are so heavy they need to sit on concrete slabs rather than on the raised floors common in cloud-era data centers. Outside, giant cooling towers chill the heat-absorbing water that circulates throughout the facility. GPU chips (each costing between $25,000 and $40,000) need so much continuous power that tapping into existing grid capacity isn’t feasible, pushing developers to place AI data centers near power plants.

The construction phase can employ thousands of people over many months: preparing sites, raising buildings, providing electrical infrastructure, installing mechanical and cooling systems, and wiring networks and hardware. Much of that work requires highly skilled labor, and estimates show the U.S. may be hundreds of thousands of workers short of what’s needed in the coming years.

Longer term, the job picture is less clear. According to the U.S. Chamber of Commerce, a typical data center supports fewer than 200 local jobs, such as technicians, facilities staff, and security. Critics argue this number is small given the billions being invested and the excessive demands placed on local power, water, land, and public infrastructure. Unlike an automotive plant, which might create thousands of long-lasting jobs, data centers are about maximizing computing power, not human employment.

Still, other experts note that AI data centers are never really “finished.” The latest blueprints depict mega-campuses built in multiple phases over decades, while existing data centers must be retrofitted for modern AI. So although AI data centers may employ fewer permanent workers, they could function as long-term infrastructure projects that create regular waves of short-term jobs.

This article appears in the February/March 2026 issue of Coins2Day with the headline “Inside today’s AI data centers.”